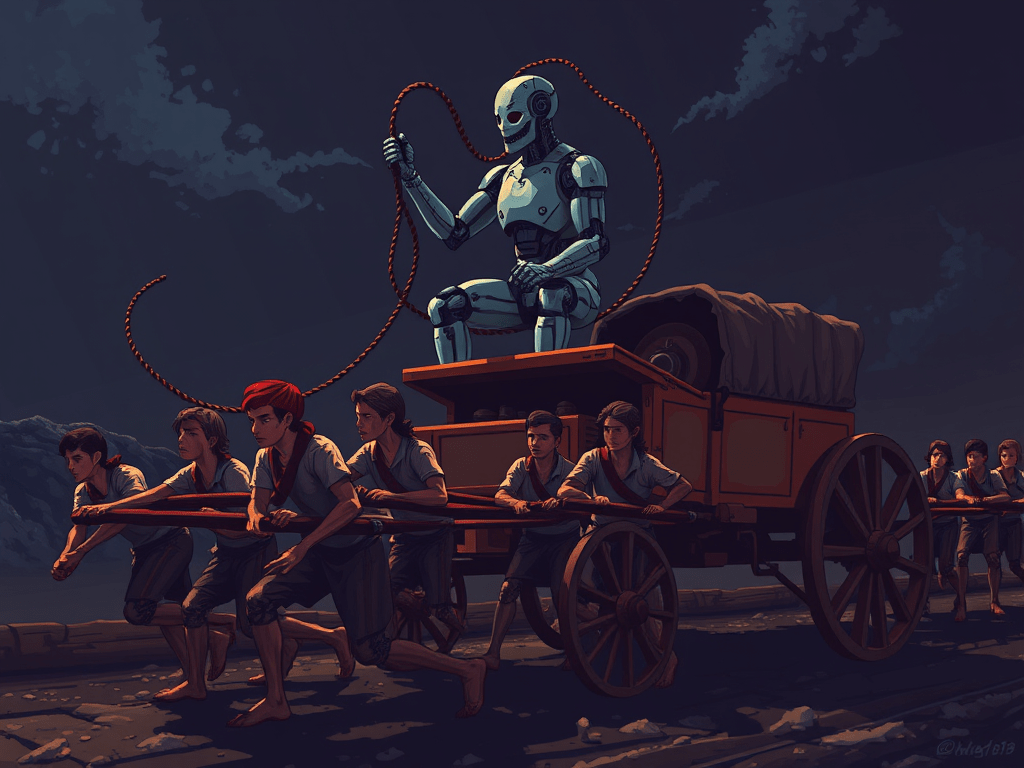

There’s a paradox that many organizations are quietly facing: people are working hard with AI, but the AI is making their work hard. It flips the common success narrative of “We’re using AI!”, because in reality it’s not always a success story. Beneath the surface lies an invisible cost of inefficiency, as humans constantly compensate for the AI’s shortcomings.

Let me unpack a few layers for you.

The illusion of progress

When teams adopt AI, engagement metrics often look promising — lots of prompts, frequent use, diverse applications. Leaders see this “depth” and think, great, people are really using it!

But underneath that surface, a hidden story unfolds: people are working harder because the AI isn’t dependable enough to get them to a clean finish. It’s like having an intern who’s eager but needs constant supervision, so you spend more time checking, fixing, and redoing. The productivity curve looks busy, but not effective.

As time passes, early adopters get frustrated and burn out. Others watch and think, “too much hassle,” so adoption stalls. Leadership sees usage data but not value creation. Teams look busy using AI, but the organization isn’t actually getting faster or smarter.

So how can you tell whether your AI adoption is creating real value — or just a mirage of success? You can track two signals: high depth and high rework/override.

High depth means users are engaging deeply. For example, you see multiple prompts, tool hops, or creative workflows.

High rework/override means they don’t trust the results, so they redo tasks manually or heavily edit the outputs.

Together, these indicate that AI is amplifying cognitive load instead of reducing it. The system may be smart, but it’s not yet reliable in the user’s mental model — and that’s the gap between “AI as a helper” and “AI as a habit.”

How to Identify “High Depth” Signals

This is the “busy” side of the story. It looks like engagement, but it may hide inefficiency. Signals to look for include:

- Many generations per task. E.g., users run 4–6 prompts to finish one report instead of 1–2.

- Frequent feature chaining. E.g., you see people using retrieval, summarization, formatting, and tone adjustment in one flow.

- Multiple tool hops. E.g., users bounce between Copilot, ChatGPT, Notion AI, and Excel formulas for one deliverable.

- Complex prompt construction. E.g., users write long, multi-layered prompts with context, role, and examples (often indicating the AI didn’t “get it” in the simpler version).

In real life, you may see a marketing manager use Copilot to write a campaign brief in the following steps:

First, she asks for ideas — too generic.

Then she tries again with more context.

Then she adds the brand tone and example.

Then she exports, reviews, fixes tone, re-runs the call to action section, and adjusts format.

It took six AI generations, 45 minutes — something she could’ve written manually in 30.

Basically, the AI-involved task takes longer and has more steps in the flow than a manual version.

How to Identify “High Rework / Override” Signals

This is the “redo” side — users take control back from the AI because they don’t trust or can’t use the output as-is. Signals to look for include:

- Prompt retry rate — user re-prompts two or more times for the same intent.

- Override rate — user discards AI output and manually completes or rewrites.

- Manual correction after generation — long edit sessions right after AI output.

- Feedback patterns — comments like “not what I asked,” “close but wrong,” or “had to fix tone.”

- Reversion to legacy tools — users export AI output to Word or Excel and continue manually.
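The first two signals in this list are simple to turn into numbers once you have per-task records. Here is a minimal sketch in Python, assuming a hypothetical `TaskRecord` with a re-prompt count and an override flag. The field names and sample data are made up for illustration; only the "two or more re-prompts" cutoff comes from the definition above.

```python
from dataclasses import dataclass


@dataclass
class TaskRecord:
    """One AI-assisted task. All field names are hypothetical."""
    task_id: str
    reprompts: int    # extra prompts for the same intent after the first one
    overridden: bool  # True if the user discarded the AI output and finished manually


def retry_rate(tasks, min_reprompts=2):
    """Share of tasks where the user re-prompted at least `min_reprompts` times."""
    if not tasks:
        return 0.0
    return sum(t.reprompts >= min_reprompts for t in tasks) / len(tasks)


def override_rate(tasks):
    """Share of tasks where the AI output was discarded and redone manually."""
    if not tasks:
        return 0.0
    return sum(t.overridden for t in tasks) / len(tasks)


# Illustrative sample data, not real measurements.
tasks = [
    TaskRecord("brief-1", reprompts=3, overridden=False),
    TaskRecord("summary-1", reprompts=2, overridden=True),
    TaskRecord("email-1", reprompts=0, overridden=False),
    TaskRecord("report-1", reprompts=1, overridden=True),
]

print(f"retry rate: {retry_rate(tasks):.0%}")
print(f"override rate: {override_rate(tasks):.0%}")
```

What counts as a "high" rate depends on your team and task mix, so treat the numbers as a trend to watch over time rather than a pass/fail score.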

In real life, you may see an analyst use an AI tool to summarize a 20-page report:

The user re-prompts twice, but the AI-generated summary still misses key metrics.

The user adds instructions like “only use the context within the report” and “focus on insights.”

The user copies the report into Word and does it manually because they think doing it by hand is faster.

Basically, the AI created more steps and brought more frustration than before.

How to Detect These Signals

If you’re monitoring AI usage, here are a few ways to detect these signals:

- Telemetry signals: In your database, count average model calls per task, retries, edits per output, and time-to-completion.

- User feedback: Run short pulse surveys that ask questions like “How often do you redo AI outputs manually?” and “How many prompts do you need before you get an output you are satisfied with?”

- Shadow observation: Sit with teams and watch how often “AI helped” becomes “AI needs fixing.”
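For the telemetry option, the counting can be quite simple. The sketch below assumes a hypothetical event log of `(task_id, event_type, timestamp_seconds)` tuples with made-up event names (`task_start`, `model_call`, `manual_edit`, `task_end`). Your own schema will differ, and treating every model call as one "output" is an assumption of this example.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical event log: (task_id, event_type, timestamp_in_seconds).
events = [
    ("t1", "task_start", 0),
    ("t1", "model_call", 10),
    ("t1", "model_call", 120),
    ("t1", "manual_edit", 300),
    ("t1", "task_end", 600),
    ("t2", "task_start", 0),
    ("t2", "model_call", 5),
    ("t2", "task_end", 200),
]


def summarize(events):
    """Aggregate raw events into the per-task signals described above."""
    per_task = defaultdict(
        lambda: {"calls": 0, "edits": 0, "start": None, "end": None}
    )
    for task_id, kind, ts in events:
        task = per_task[task_id]
        if kind == "model_call":
            task["calls"] += 1
        elif kind == "manual_edit":
            task["edits"] += 1
        elif kind == "task_start":
            task["start"] = ts
        elif kind == "task_end":
            task["end"] = ts

    tasks = list(per_task.values())
    # Only tasks with both a start and an end contribute to time-to-completion.
    finished = [t for t in tasks if t["start"] is not None and t["end"] is not None]
    total_calls = sum(t["calls"] for t in tasks)
    total_edits = sum(t["edits"] for t in tasks)

    return {
        "avg_model_calls_per_task": mean(t["calls"] for t in tasks) if tasks else 0.0,
        # Assumption: each model call produces one output.
        "edits_per_output": total_edits / total_calls if total_calls else 0.0,
        "avg_time_to_completion_s": (
            mean(t["end"] - t["start"] for t in finished) if finished else None
        ),
    }


summary = summarize(events)
for name, value in summary.items():
    print(name, value)
```

Comparing these numbers against a manual baseline for the same kind of task is what tells you whether the AI-involved flow is actually faster.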

Final thoughts

When people start working hard with AI, it’s often a symptom of mistrust and inefficiency, not mastery.

They’re not power users — they’re power compensators.

And that’s where redesign should begin — not by adding more features, but by reducing friction between intention and outcome.
